Document AI joined Snowflake through a startup acquisition. The product carried high business impact but had limited alignment with Snowflake’s platform, user experience principles, and defined AI strategy. As the first in-house designer dedicated to the product, I was responsible for guiding its evolution from an independent proof-of-concept into a coherent part of Snowflake’s unified AI ecosystem.

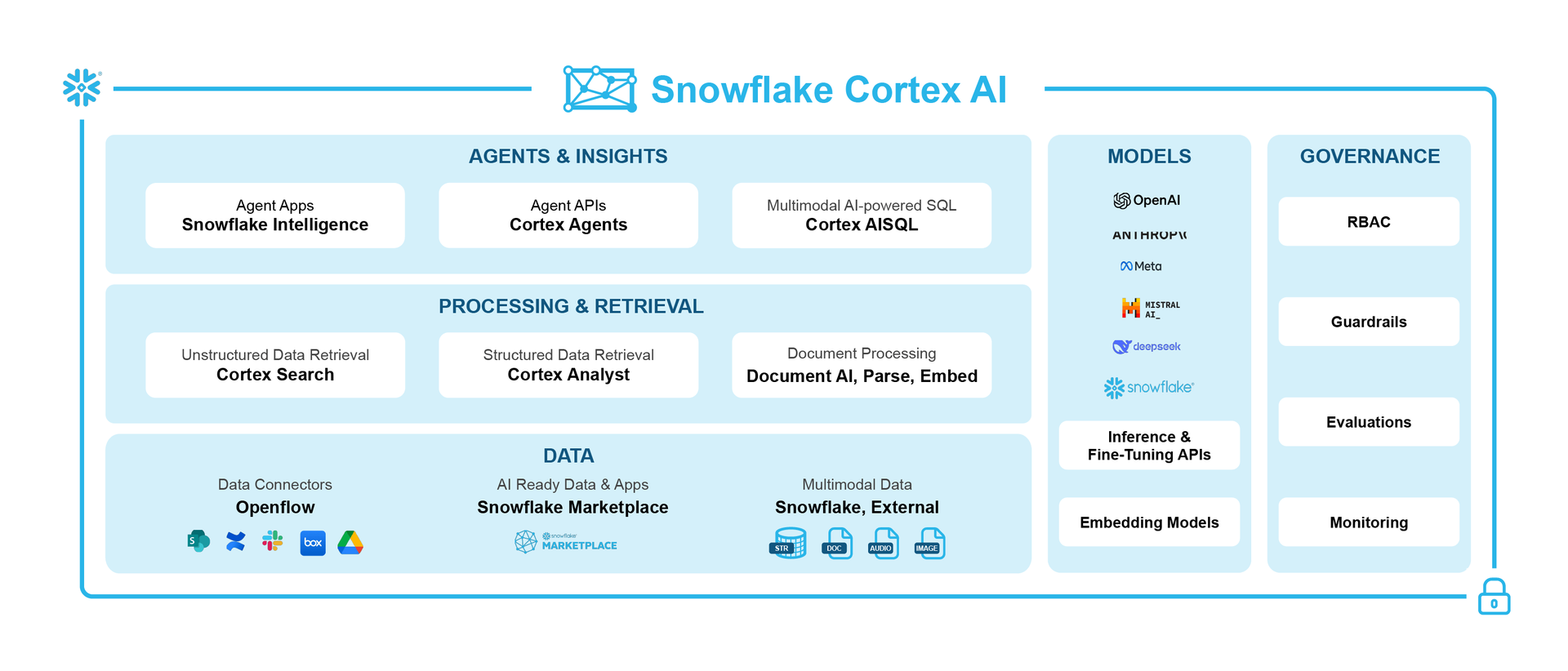

This required understanding not only user needs and system constraints but also how Document AI should coexist with multiple internally developed AI capabilities—some overlapping in purpose. The challenge therefore extended beyond interface design: it demanded ecosystem thinking, cross-functional alignment, and strategic decision-making.

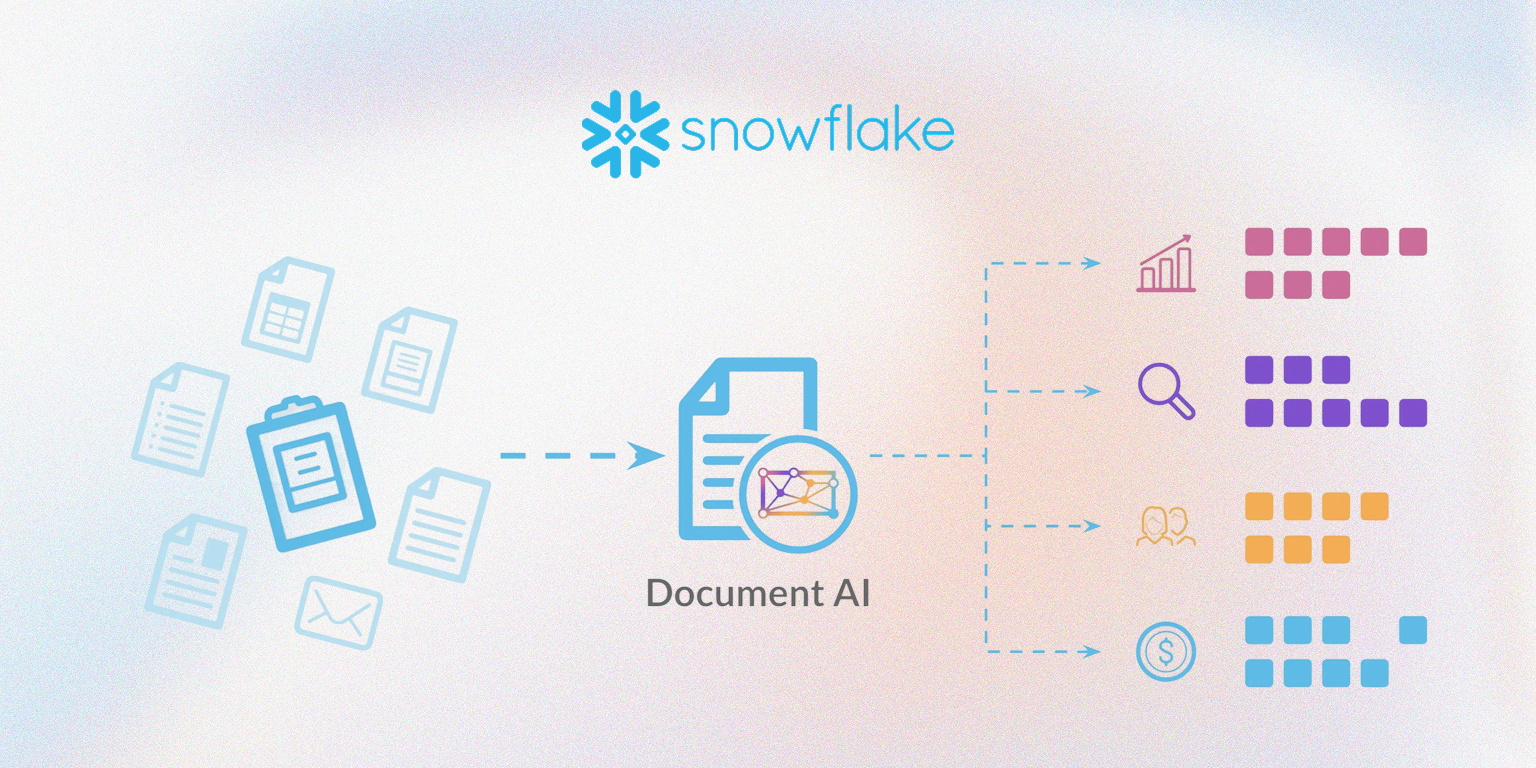

Roughly 80% of enterprise data is unstructured—spread across documents, emails, images, and web pages where critical business context often resides. Yet extracting this information is still slow, manual, and expertise-heavy. Document AI changes this by letting teams validate a small, high-quality sample to generate a model that can reliably process millions or billions of documents. The workflow diagram illustrates this leverage: minimal human labeling produces scalable, production-grade extraction.

One of my earliest responsibilities was defining where Document AI fits within Cortex AI, Snowflake’s evolving AI stack. This included clarifying its functional boundaries, identifying integration points with Snowflake’s platform, and ensuring that we did not duplicate or conflict with adjacent AI efforts.

Document AI also emerged at a time when Snowflake was facing a broader platform challenge. In 2023, the company shipped a record number of General Availability releases, yet users were overwhelmed by the pace of change and consistently reported difficulty even finding—let alone understanding—many of these new capabilities. Senior leadership responded with a company-wide OKR to strengthen feature clarity and platform consistency. As an acquired product with distinct patterns and mental models, Document AI quickly came under the spotlight as a high-visibility initiative—one that needed to integrate seamlessly into the Snowflake ecosystem and demonstrate clear, meaningful usage.

The team entered quarterly planning with misaligned expectations:

1. Leadership prioritized platform consistency and alignment with Snowflake’s design system.

2. Engineering focused on rewriting the front end from Vue to React to reduce technical debt.

3. PM pushed for feature expansion to strengthen competitive differentiation.

4. Design aimed to improve usability and correct ecosystem inconsistencies.

Each direction was valid but incomplete on its own. My role was not to choose sides, but to clarify what problem we were truly solving.

I spent the first weeks understanding technical constraints, product ambitions, and organizational goals. This cross-functional fact-finding—conducted without formal authority—created a neutral, shared understanding of the decision space. It established a foundation for transparent prioritization grounded in evidence rather than preference, which became essential for the next steps of strategic alignment.

To avoid assumption-driven decisions, I established a continuous intake loop with internal and external stakeholders. Weekly sessions with Sales, Solution Engineers, and Account Executives revealed common customer frustrations. Discussions with Public Preview users highlighted workflow inefficiencies and usability gaps.

Quantitative data offered further clarity: document length distribution, extraction failure patterns, correction frequency, and time spent validating results. Across all inputs, one insight consistently surfaced: the human feedback loop—where users corrected model output—generated the highest friction and remained difficult even for advanced users.

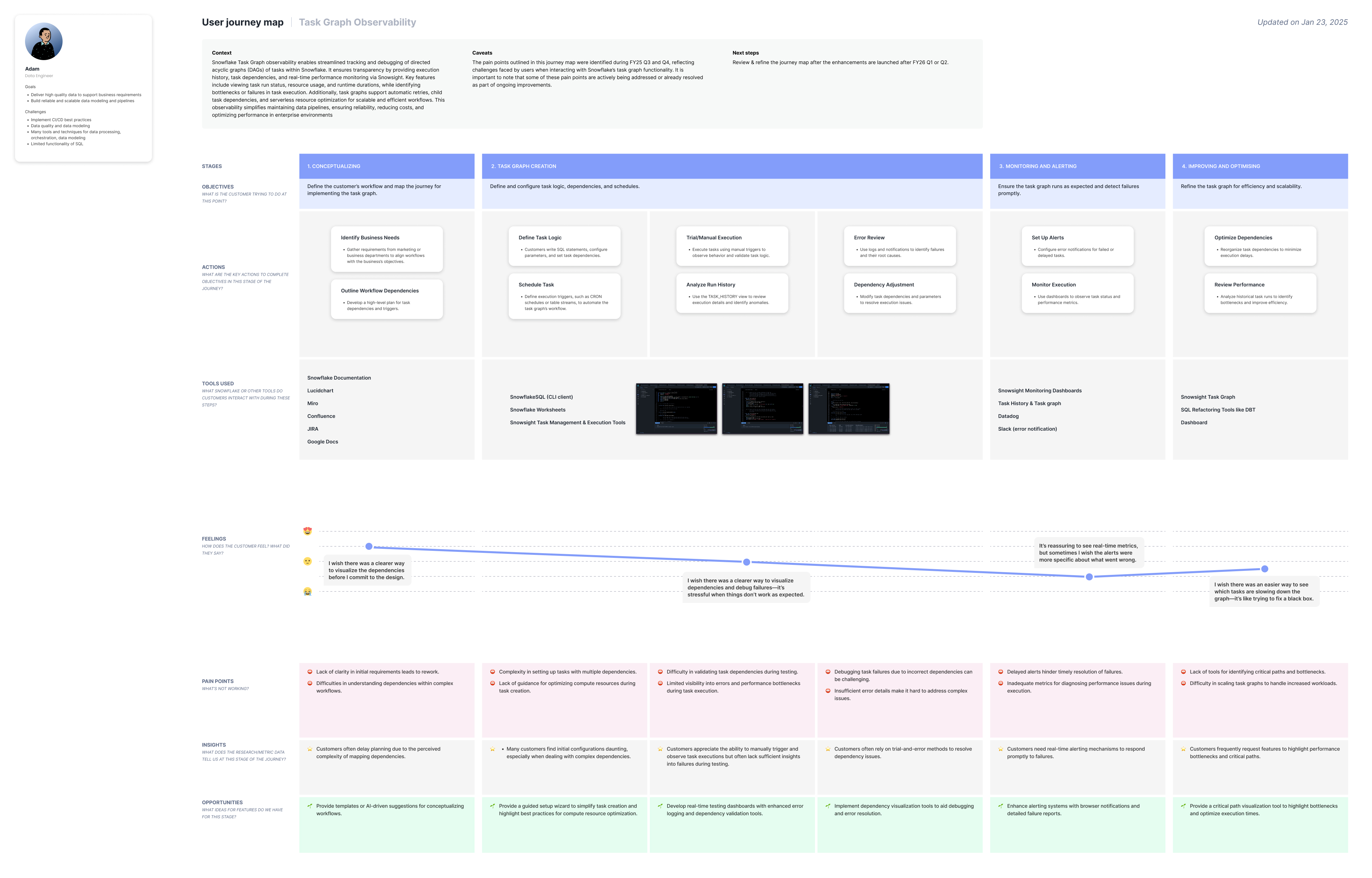

Using these combined insights, I led the creation of the first end-to-end User Journey for Document AI. This artifact clarified behavioral patterns, technical dependencies, and user pain points, providing shared visibility for engineering, PM, and leadership. It became the foundation for all subsequent decision-making.

With the User Journey established, we initiated a co-creation workshop among PM, engineering, and design. The session mapped evidence to impact, helping this trio identify the most critical part of the workflow. The conclusion was clear: improving the human feedback experience would provide recurring value in every session, while consistency updates, although important, offered diminishing returns in the short term.

Our next challenge was alignment with Directors and VPs. Their OKRs emphasized platform consistency, but our evidence showed that enhancing feedback efficiency would drive greater customer value. In a 25-minute planning review, we used the User Journey to explain the friction points and demonstrate the downstream effects of delayed corrections.

The clarity of the evidence led leadership to approve our proposed direction and grant an exemption from the original OKRs. This outcome emerged through structured reasoning, transparent communication, and the ability to influence without authority.

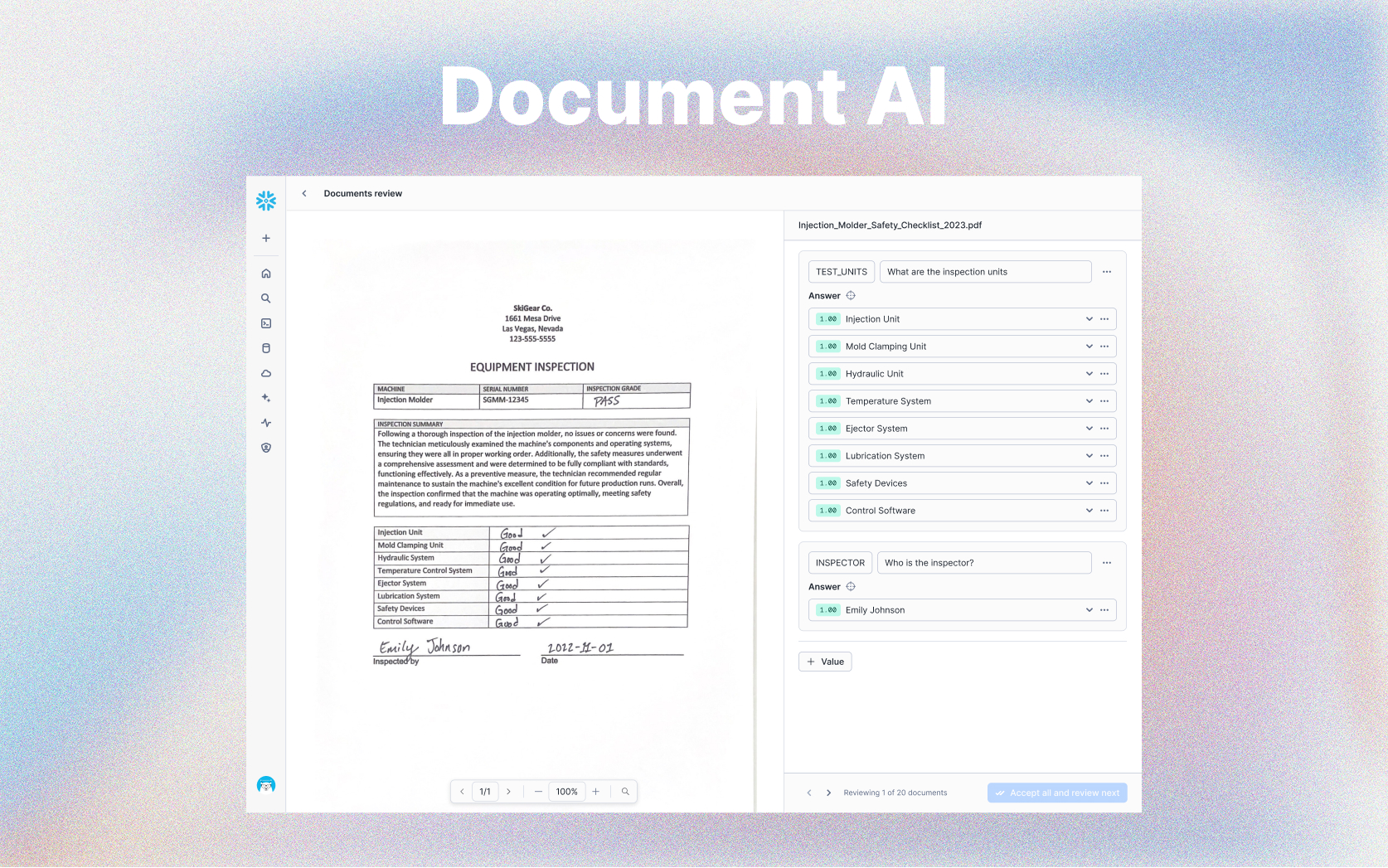

Our objective was to streamline the core feedback workflow, improving both speed and accuracy. The redesign focused on reducing cognitive load, clarifying system behavior, and minimizing context switching during validation. Enhancements included clearer confidence indicators, optimized navigation between questions, in-place editing, and a more scalable layout for long documents.

Engineering measured overall time-to-completion and credit consumption, but these metrics did not isolate the impact of design. I introduced two additional measurements: time per corrected answer and time spent within the feedback loop. These provided a direct view into how UI and interaction changes affected user efficiency.
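As a rough illustration of how these two measurements could be derived, here is a minimal sketch in Python. It assumes a hypothetical telemetry stream with three event kinds (`loop_enter`, `loop_exit`, `answer_corrected`) for a single session, and it assumes that "time per corrected answer" means feedback-loop time divided by the number of corrections. Neither the event names nor that definition come from the actual Document AI instrumentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One hypothetical UI telemetry event from a single session."""
    kind: str         # assumed kinds: "loop_enter", "loop_exit", "answer_corrected"
    timestamp: float  # seconds since the session started


def feedback_loop_seconds(events):
    """Sum the time between each loop_enter and the next loop_exit."""
    total = 0.0
    entered_at = None
    for e in sorted(events, key=lambda e: e.timestamp):
        if e.kind == "loop_enter" and entered_at is None:
            entered_at = e.timestamp
        elif e.kind == "loop_exit" and entered_at is not None:
            total += e.timestamp - entered_at
            entered_at = None
    return total


def seconds_per_corrected_answer(events):
    """Feedback-loop time divided by corrections; None if nothing was corrected."""
    corrections = sum(1 for e in events if e.kind == "answer_corrected")
    if corrections == 0:
        return None
    return feedback_loop_seconds(events) / corrections


# Made-up sample session: two visits to the feedback loop, three corrections.
session = [
    Event("loop_enter", 10.0),
    Event("answer_corrected", 25.0),
    Event("answer_corrected", 40.0),
    Event("loop_exit", 50.0),
    Event("loop_enter", 60.0),
    Event("answer_corrected", 70.0),
    Event("loop_exit", 80.0),
]

loop_time = feedback_loop_seconds(session)
per_answer = seconds_per_corrected_answer(session)
```

Comparing these two numbers before and after a UI change, rather than overall time-to-completion, is what separates the effect of the interaction design from model latency and document size.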

Combined with model-behavior analysis and workflow restructuring, the redesign successfully reduced friction in the step users encountered every time they used the product—ultimately improving user productivity and strengthening downstream model performance.

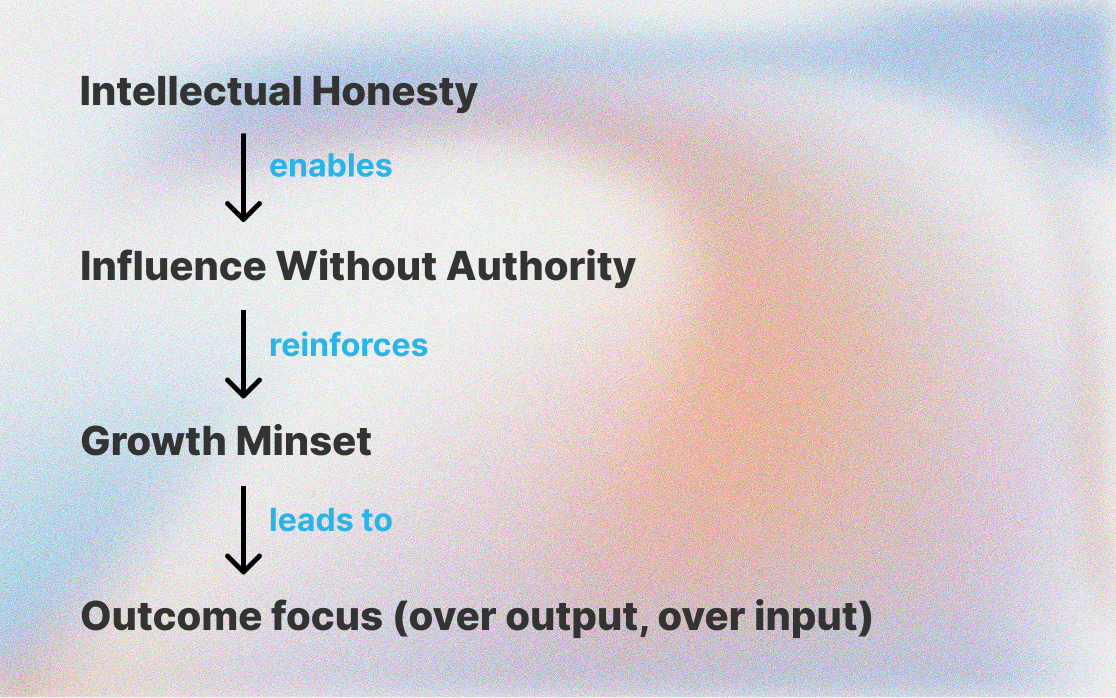

This project affirmed that intellectual honesty is the foundation of my work. It enables me to influence without authority by giving people clarity they can trust, drives a growth mindset by revealing what we must learn, and keeps me focused on outcomes over output, and output over input. By grounding decisions in evidence and aligning diverse teams around what truly matters, I helped shape a direction for Document AI that delivered meaningful impact within Snowflake’s evolving AI ecosystem.